Nectar - STING for Desktop


What is Nectar?

Nectar is the desktop app for STING. It gives you a native application for managing your knowledge and chatting with your documents—all running locally on your machine.

Local AI

Runs with Ollama. Your documents and conversations never leave your machine. No cloud, no subscriptions, no data sharing.

Honey Jars

Organize your knowledge into encrypted, searchable collections. PDFs, Word docs, Markdown—throw it all in and search with AI.

Bee AI

Chat with your documents. Ask questions, get answers with citations. Bee AI learns your content and speaks your language.

Works Offline

Once installed, Nectar works without internet. Perfect for sensitive work, travel, or just avoiding distractions.

Connect to STING Server

Optionally connect to a STING Server to access shared team knowledge while keeping a native desktop experience.

Passkey Auth

Secure login with Touch ID, Face ID, Windows Hello, or hardware keys. No passwords to remember or leak.

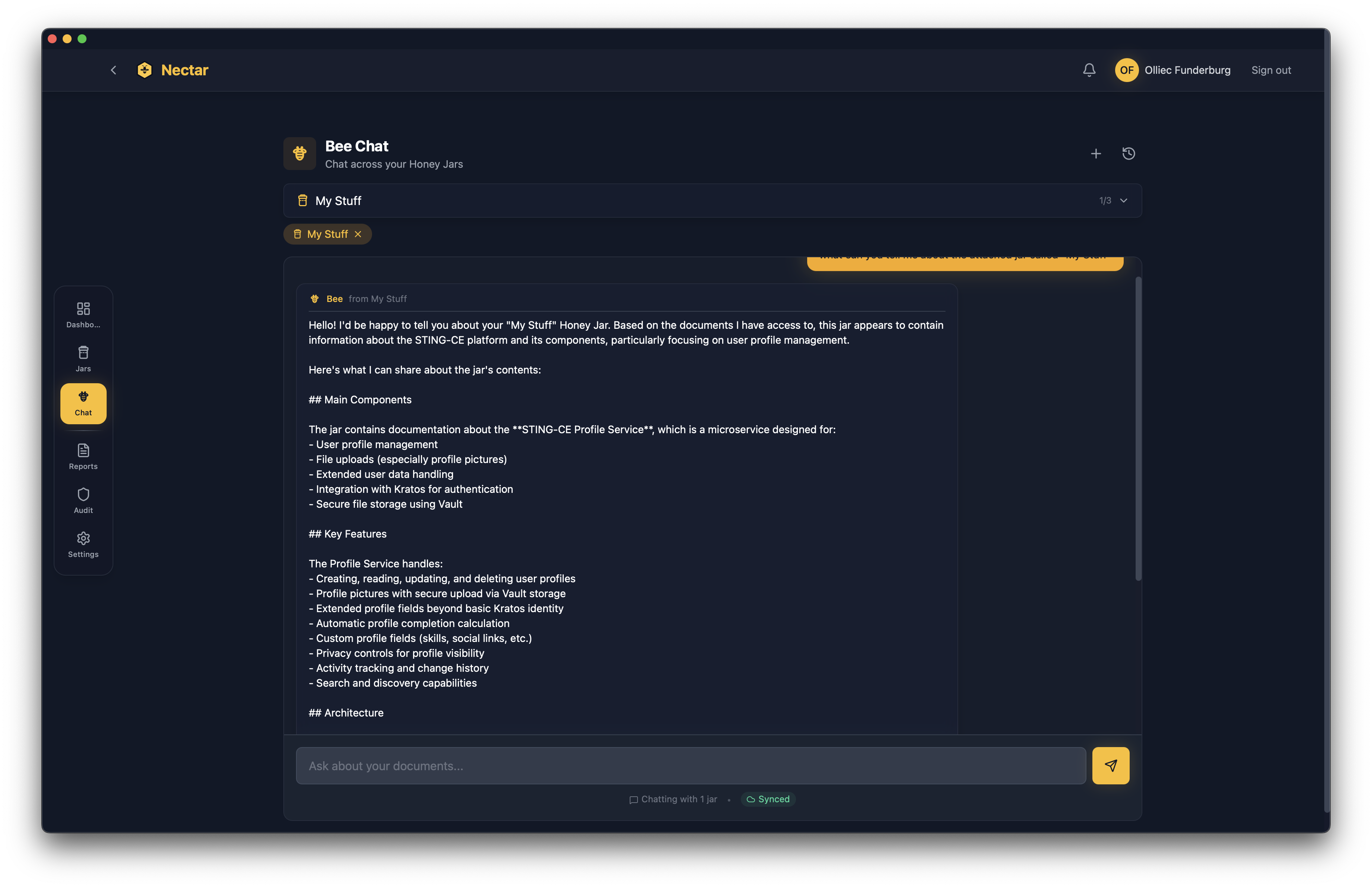

Screenshots

Bee Chat - Ask questions about your documents

Honey Jars - Your knowledge bases

Drag & drop document uploads

6 built-in themes - from Modern Glass to Retro Terminal

Requirements

| Component | Minimum | Recommended |
|---|---|---|
| RAM | 8GB | 16GB+ |
| Storage | 10GB free | 20GB+ SSD |
| CPU | Dual-core | Quad-core+ |
| GPU | Not required | Helps with larger models |

Nectar runs Docker containers locally. First launch will download ~1.5GB of container images. AI models via Ollama add 2-8GB each depending on model size.
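As a rough worked example of the storage figures above, the sketch below adds the ~1.5GB of container images to the size of however many models you plan to keep. The function name `estimate_disk_gb` is hypothetical and only for illustration; it ignores space used by your own documents and by Docker itself.

```shell
# estimate_disk_gb MODEL_COUNT GB_PER_MODEL
# Hypothetical helper: ~1.5 GB of container images plus the models.
estimate_disk_gb() {
    awk -v n="$1" -v per="$2" 'BEGIN { printf "%.1f\n", 1.5 + n * per }'
}

estimate_disk_gb 2 2   # two small (2 GB) models  -> 5.5
estimate_disk_gb 2 8   # two large (8 GB) models  -> 17.5
```

Two large models already approach the recommended 20GB, which is why the minimum of 10GB free is only comfortable with one or two small models.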

Prerequisites

Docker - Nectar runs STING services in Docker containers. Install Docker first:

- macOS: Docker Desktop for Mac

- Windows: Docker Desktop for Windows

- Linux: Docker Engine or Docker Desktop

Ollama - For local AI models. Install after Docker:

macOS / Linux:

curl -fsSL https://ollama.com/install.sh | sh

Windows: Download from ollama.com

Ollama will download AI models as needed when you first use Nectar.
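Before installing Nectar, it can help to confirm that both prerequisites are on your PATH. The following is a minimal sketch, not part of Nectar; `have_tool` is a hypothetical helper name, and the script only reports what it finds without installing anything.

```shell
# have_tool NAME: succeeds if NAME is found on PATH (hypothetical helper).
have_tool() {
    command -v "$1" >/dev/null 2>&1
}

# Report each prerequisite without aborting when one is missing.
for tool in docker ollama; do
    if have_tool "$tool"; then
        echo "$tool: found ($("$tool" --version 2>/dev/null | head -n 1))"
    else
        echo "$tool: not found on PATH"
    fi
done
```

Finding the `docker` binary does not guarantee the Docker daemon is running; on Docker Desktop you may still need to start the app before launching Nectar.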

Installation

macOS:

- Download the .dmg file

- Open the downloaded file

- Drag Nectar to your Applications folder

- First launch: Right-click → Open (to bypass Gatekeeper for unsigned apps)

- Grant permissions when prompted (accessibility, files)

Note: Nectar is currently unsigned. macOS will warn you on first launch. This is normal for alpha software.

Windows:

- Download the .exe installer

- Run the installer

- Windows SmartScreen may warn about an unrecognized app—click "More info" → "Run anyway"

- Follow the installation wizard

- Launch Nectar from the Start menu

Note: Nectar is currently unsigned. Windows will show a SmartScreen warning. This is normal for alpha software.

Debian/Ubuntu (.deb):

sudo dpkg -i Nectar.deb

sudo apt-get install -f # Install dependencies if needed

AppImage:

chmod +x Nectar.AppImage

./Nectar.AppImage

Note: You may need to install additional dependencies for WebKit on some distributions.

Quick Start

- Install Docker (see prerequisites above)

- Install Ollama for local AI models

- Download and install Nectar for your platform

- Launch Nectar - Docker containers will start automatically on first run

- Create a Honey Jar and add some documents

- Start chatting with Bee AI about your documents

First launch takes a few minutes while containers download. After that, startup is fast.

FAQ

Everything stays on your machine:

- macOS: ~/Library/Application Support/Nectar

- Windows: %APPDATA%\Nectar

- Linux: ~/.local/share/nectar

No cloud sync, no telemetry, no data leaving your device.
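To see how much space your local data uses, or to locate it for a backup, the sketch below resolves the data directory from the paths listed above. `nectar_data_dir` is a hypothetical helper, and it covers macOS and Linux only, since Windows uses %APPDATA%\Nectar outside a POSIX shell.

```shell
# nectar_data_dir [OS_NAME]: print the data directory for a platform.
# OS_NAME defaults to the output of `uname -s`. Hypothetical helper.
nectar_data_dir() {
    os="${1:-$(uname -s)}"
    case "$os" in
        Darwin) printf '%s\n' "$HOME/Library/Application Support/Nectar" ;;
        Linux)  printf '%s\n' "$HOME/.local/share/nectar" ;;
        *)      echo "unsupported platform: $os" >&2; return 1 ;;
    esac
}

# Show disk usage if the directory exists on this machine.
if dir="$(nectar_data_dir)" && [ -d "$dir" ]; then
    du -sh "$dir"
else
    echo "No Nectar data directory found"
fi
```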

Coming soon. We're actively working on STING Server sync, which will let you:

- Access shared team Honey Jars

- Use server-side AI models

- Keep your local work while accessing team knowledge

For now, Nectar works fully standalone. Check the roadmap for updates.

Local models via Ollama:

- Qwen 2.5 - Good all-around model

- Phi-4 - Microsoft's efficient reasoning model

- Llama 3.3 - Meta's latest

- Any model Ollama supports

Cloud providers (optional):

- OpenAI - GPT-4o, GPT-4, etc.

- Anthropic - Claude 3.5 Sonnet, Claude 3 Opus

- Any OpenAI-compatible API endpoint

PII Protection: When using cloud providers, Nectar's PII layer automatically detects and scrambles sensitive data (SSNs, credit cards, phone numbers, etc.) before it leaves your machine. Responses are de-scrambled automatically. Your sensitive data never reaches the cloud.
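To illustrate the general idea, here is a simplified stand-in for the detection step. It is an assumption-laden sketch, not Nectar's actual PII layer: it uses a few US-style regular expressions and replaces matches with fixed placeholders, so unlike the scrambling described above it cannot be reversed. The function name `mask_pii` and the patterns are hypothetical.

```shell
# mask_pii: read text on stdin and replace simple PII patterns with
# placeholders. Hypothetical sketch; real detection needs far more
# than three regular expressions.
mask_pii() {
    sed -E \
        -e 's/[0-9]{3}-[0-9]{2}-[0-9]{4}/[SSN]/g' \
        -e 's/[0-9]{4}[ -][0-9]{4}[ -][0-9]{4}[ -][0-9]{4}/[CARD]/g' \
        -e 's/[0-9]{3}[ -][0-9]{3}-[0-9]{4}/[PHONE]/g'
}

echo "SSN 123-45-6789, call 555-867-5309" | mask_pii
# prints: SSN [SSN], call [PHONE]
```

A reversible version would keep a local mapping from each placeholder to its original value and substitute the values back into the provider's response.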

Yes. Nectar is free to use with no subscriptions, no usage limits, and no feature gates. You own your data completely.

The Nectar source code is maintained in a private repository, but the application itself is free to download and use.

This is alpha software—bugs are expected!

Include your OS, Nectar version, and steps to reproduce.

Need the Server Version?

Nectar is for personal use on a single machine. If you need multi-user access or team features, check out STING Server.